Archive for category Technical guides

NIST Special Publication 800-81 Revision: Sections 1-5

Posted by Scott Rose in Technical guides on June 21, 2012

Note: This is the second post in a series about the revision of NIST Special Publication (SP) 800-81: Secure Domain Name System (DNS) Deployment Guide.

In revising NIST SP 800-81, I am going through it section by section and seeing what parts need to be revised. This is in addition to an entirely new section with recommendations on recursive servers and validators.

Looking at Sections 1-5, there seems to be relatively little that needs changing, but let’s break it down by section:

Section 1 Introduction: Besides minor updates to bring it into conformance with the newly added section(s), there is not much to do here.

Section 2 Securing Domain Name System: Most of this section provides background on the DNS and breaks down the various components. Again, since there haven’t been any radical changes to the DNS protocol itself, there aren’t any significant changes. Maybe some additional text about new gTLDs belongs in this section? It would add information, but nothing significant.

Section 3 DNS Data and DNS Software: This section describes the basic roles (authoritative and caching servers). Should a subsection on validators be included? Are validators different enough to warrant a separate subsection, or should some discussion simply be added to the subsection covering resolvers?

Section 4 DNS Transactions: This section describes the basic transactions in DNS and their message types (query/response, dynamic update, NOTIFY, etc.). Since there hasn’t been a new DNS transaction type defined since the first version of this publication, no apparent edits are needed here.

Section 5 DNS Hosting Environment – Threats, Security Objectives and Protection Approaches: This section details some threats to the host systems used in DNS (i.e., servers, resolvers). Most of the section is still relevant, but the subsection on resolvers might need some updating to address validation. The discussion is high-level, so trends like virtualization do not need to be discussed, but may be included if there are valid concerns.

As before, if you have comments or opinions on the questions above, post them below. They will be considered as the SP 800-81 revision process continues.

The views presented here are those of the author and do not necessarily represent the views or policies of NIST.

New Revision of NIST Special Publication (SP) 800-81 Under Way

Posted by Scott Rose in Technical guides on June 15, 2012

This post is the first in a series documenting the update of NIST SP 800-81, Secure Domain Name System (DNS) Deployment Guide. The current version (SP 800-81r1) was published in April 2010. Since that date, DNSSEC deployment Internet-wide has matured and advanced to the point where a new revision is justified.

The goal of the new revision of NIST SP 800-81 is to update the recommendations based on current deployment experience. There have also been recent developments in the specification of new cryptographic algorithms for use with DNSSEC (such as Elliptic Curve). The new revision will add discussion of the new crypto algorithms as well as update the existing recommendations for DNS and DNSSEC operation.

We are also planning a new section of the document to provide guidance and recommendations for recursive DNS servers and validators. With DNSSEC deployment on the rise, more enterprises (and even ISPs) are configuring DNSSEC validation. Validation is also planned as a required control in the upcoming revision of the FISMA security controls (NIST SP 800-53r4), so the new section will be timely.

The plan is to have regular updates on this blog as the draft revision progresses. This is also one of the forums to discuss the revision during the process. As with all NIST Special Publications, there will be a draft document posted with an official comment period; this blog is simply an attempt to solicit input before the initial public draft is released for public comment.

The views presented here are those of the author and do not necessarily represent the views or policies of NIST.

A validating recursive resolver on a $70 home router

Posted by Bob Novas in Adoption, DNSSEC, Technical guides on March 15, 2012

As an experiment, I installed a validating recursive resolver on a home/SOHO router. I also set the router to advertise this resolver in DHCP as the DNS server for the LAN served by the router. The experiment was a resounding success — the clients on the router LAN only receive Secure or Provably Insecure DNS responses and do not receive Bogus responses.

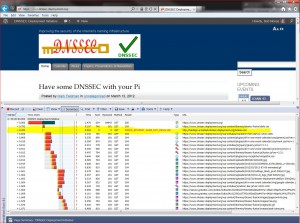

I was able to verify this by navigating to a bogus address. For example, here is a screen shot of navigating to a domain with a bogus signature (http://bogussig.dnssec.tjeb.nl). If you are on a non-validating network, you will be able to resolve this link. If your network is validating, however, you should get an error if you click the link.

The HttpWatch browser plugin shows that the DNS query for this address very quickly returns an error.

So, how did I do this? I started with a Buffalo WZR-HP-G300NH v2 router, which I purchased online for just under US$70. The router runs a customized version of the DD-WRT Open Source software out of the box. The router has 32MB of NVRAM and 64MB of RAM. The basic configuration with an Unbound recursive resolver requires about 10MB of NVRAM, so this router has more than enough storage.

A few words of caution. Not all routers are suitable — you need to have a router that is supported by OpenWRT and the router needs to have enough flash memory. Also, you may lose any special features that are included in the manufacturer’s firmware. For the router I used, I lost the “movie engine” feature and the ability to support a file system on the router’s USB port. I could have loaded more packages from OpenWRT to support a file system. Finally, there is a risk that you will brick the router — i.e., turn it into a paperweight. You’ll need some level of expertise with technology and a platform that supports telnet, ssh and scp (e.g., Cygwin on Windows, or a Mac or Linux box).

Because of the greater ease of configuration that it offers, I reflashed the router with the OpenWRT router software. Then I loaded and configured Unbound, a BSD-licensed recursive resolver, and was up and running.

Here are the specifics of how I accomplished this:

- I downloaded the right firmware image for the router from the OpenWRT nightly build site. For the Buffalo WZR-HP-G300NH2 router, I used the squashfs sysupgrade build. I needed the sysupgrade image because I was going from a DD-WRT build to an OpenWRT build. Other firmware images are available for this router that are specific to other circumstances. If you are not flashing this specific router, you will need to determine the right firmware image yourself.

- I connected my PC to a LAN port on the router. I set the PC to use an address on the same subnet as the router – in my case 192.168.1.10 as the router is at 192.168.1.1.

- I pointed my browser at the router, set a password, and logged in to the router’s Web UI.

- I navigated to the Administration/Services page and enabled the SSH daemon. At the same time I set up the authentication for SSH.

- From a terminal window on my PC, I copied the OpenWRT firmware to the router.

scp openwrt-ar71xx-generic-wzr-hp-g300nh2-squashfs-sysupgrade.bin root@192.168.1.1:/tmp

- I then logged into the router via ssh to flash OpenWRT onto the router in place of the supplied DD-WRT:

ssh root@192.168.1.1
cd /tmp
mtd -r write openwrt-ar71xx-generic-wzr-hp-g300nh2-squashfs-sysupgrade.bin linux

- I waited for a good long while. When the red light stopped blinking, I had successfully flashed the router. It did not yet have a Web UI, but I was able to access it using telnet.

- I used telnet to access the router with no username or password and set a password. This should disable telnet and enable ssh with that password (username root):

telnet 192.168.1.1
passwd

(set a password when prompted)

- I connected an Ethernet cable from my home LAN to the router’s WAN port (you could also connect the cable directly to your cable or DSL modem).

- I ssh’d into the router and loaded and installed the router’s Web UI, called luci:

ssh root@192.168.1.1
opkg update
opkg install luci
/etc/init.d/uhttpd enable
/etc/init.d/uhttpd start

- At this point I was able to log in to the router’s Web UI by pointing my browser to 192.168.1.1. On my first login, I needed to set a username and password. I found that no matter what username I set, I always needed to use the username root to log in to the router via ssh. It did use the password I set, however.

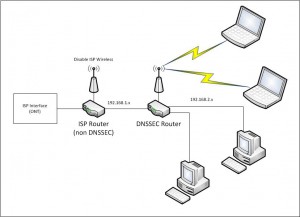

- I think the best thing to do next is to move the router’s LAN to another subnet. My primary subnet (i.e., the one my ISP’s router provides, which is plugged into the WAN port of the router I’m working on) is 192.168.1.x. I logged in to the router’s Web UI and moved the router’s LAN to 192.168.2.1. This avoids an IP address conflict between the two subnets. Since my PC is plugged into a LAN port of the router, I had to change my PC’s address to something like 192.168.2.10 so it could talk to the router once it was on 192.168.2.1.

- I then ssh’d back into the router and used opkg to install Unbound (this can also be done using the Web UI from the System/Software page):

ssh root@192.168.2.1
opkg install unbound

- I enabled Unbound at startup by navigating a browser to the router’s System/Startup page and clicking on Unbound’s Disabled button to turn it to Enabled.

- I disabled the DNS function of dnsmasq (the default DHCP and DNS server on the router) by navigating the browser to the Network/DHCP and DNS/Advanced page and setting DNS Server Port to 0 (zero).

- Finally, from my ssh session, I told dnsmasq to advertise the router (Unbound, really) as the DNS server in DHCP responses:

uci add_list dhcp.lan.dhcp_option="6,192.168.2.1"
uci commit dhcp
/etc/init.d/dnsmasq restart
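In the dhcp_option value above, 6 is the DHCP option code for the list of DNS servers, and the address is the value handed to clients. As a rough illustration of what ends up in the DHCP reply, this Python sketch encodes that option as a code, length, value triple. The function name is hypothetical, and dnsmasq does this encoding itself; nothing here needs to be run on the router.

```python
import socket

DHCP_OPTION_DNS_SERVERS = 6  # option code for "Domain Name Server"


def encode_dns_option(*servers):
    """Encode one or more IPv4 DNS server addresses as a DHCP option.

    Layout: 1 byte option code, 1 byte length, then 4 bytes per address.
    """
    value = b"".join(socket.inet_aton(server) for server in servers)
    return bytes([DHCP_OPTION_DNS_SERVERS, len(value)]) + value


# The router's LAN address from the steps above.
print(encode_dns_option("192.168.2.1").hex())  # 0604c0a80201
```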

At this point, my router was up and running, dnsmasq’s DHCP was advertising the router as the DNS server, and Unbound was running as a recursive resolver on the router. Every computer on the router’s LAN (192.168.2.x) that auto-configures using DHCP now uses the router’s Unbound resolver for DNS and is protected by DNSSEC validation. My network is configured as shown in the figure below.

One thing we noticed after running the router for a few days — be sure to leave NTP running on the router. In our case, we turned NTP off for some debugging and forgot to turn it back on (NTP is on the OpenWRT System/System page, under “Enable buildin NTP Server”.) After a few days, the router’s time was far enough off to cause validation to fail. Time is important for validation to work correctly because DNSSEC signatures have inception and expiration times.
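To make the time dependency concrete, here is a minimal Python sketch of the window check a validator applies to each signature. It is not Unbound's code: the function names and the sample timestamps are made up for illustration, and a real validator compares 32-bit timestamps using serial number arithmetic and may tolerate some clock skew. The timestamp layout (YYYYMMDDHHmmSS, in UTC) is the one RRSIG records use in zone files and dig output.

```python
from datetime import datetime, timezone

RRSIG_TIME_FORMAT = "%Y%m%d%H%M%S"  # e.g. 20120301000000


def parse_rrsig_time(text):
    """Parse an RRSIG inception or expiration timestamp as UTC."""
    return datetime.strptime(text, RRSIG_TIME_FORMAT).replace(tzinfo=timezone.utc)


def signature_is_current(now, inception, expiration):
    """Return True if 'now' lies inside the signature validity window."""
    return parse_rrsig_time(inception) <= now <= parse_rrsig_time(expiration)


# Hypothetical signature valid for the month of March 2012.
INCEPTION = "20120301000000"
EXPIRATION = "20120331000000"

correct_clock = datetime(2012, 3, 15, 12, 0, tzinfo=timezone.utc)
drifted_clock = datetime(2012, 4, 2, 12, 0, tzinfo=timezone.utc)  # clock running ahead

print(signature_is_current(correct_clock, INCEPTION, EXPIRATION))  # True
print(signature_is_current(drifted_clock, INCEPTION, EXPIRATION))  # False
```

With the wrong clock, a perfectly good signature looks expired (or not yet valid), and the resolver treats the answer as Bogus.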

We plan on doing some benchmarking of Unbound’s performance on this platform and on doing similar experiments with other routers. Email us at info @ dnssec-deployment.org with your experience doing this or any questions or feedback.

Some Fragments are more equal than other fragments

Posted by Olafur Gudmundsson in Adoption, DNSSEC, Technical guides on March 9, 2012

[Update of a post from January 28, 2010 to fix broken links and add details]

My last post was about being able to receive fragmented DNS answers over UDP.

Last week (that is, on January 20, 2010), I made a minor revision to a script of mine that checks whether all of the name servers for a zone have the same SOA record and support EDNS0. This indicates whether the servers are in sync and can serve DNSSEC. During testing of the updated script, all the servers for .US timed out. This had never happened before, but .US turned on DNSSEC last month, so I started to investigate.
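For readers who want to see what such a check puts on the wire: EDNS0 support is signalled by an OPT pseudo-record in the additional section of the query, and a request for DNSSEC records by the DO bit inside it. The following is a minimal Python sketch of building such a query by hand. It is not the script discussed in this post; the function names, the query ID and the 4096-byte advertised buffer size are assumptions chosen for illustration.

```python
import struct

TYPE_SOA = 6
TYPE_OPT = 41
CLASS_IN = 1
DO_BIT = 0x00008000  # "DNSSEC OK" flag inside the OPT record's TTL field


def encode_name(name):
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        if label:
            out += bytes([len(label)]) + label.encode("ascii")
    return out + b"\x00"


def build_query(name, qtype=TYPE_SOA, query_id=0x1234, dnssec=True, udp_size=4096):
    """Build a non-recursive DNS query, optionally with an EDNS0 OPT record."""
    arcount = 1 if dnssec else 0
    # Flags 0x0000: standard query with recursion not desired.
    header = struct.pack("!HHHHHH", query_id, 0x0000, 1, 0, 0, arcount)
    question = encode_name(name) + struct.pack("!HH", qtype, CLASS_IN)
    opt = b""
    if dnssec:
        # OPT record: root name, type 41, class carries the UDP buffer size,
        # TTL carries extended flags (DO bit set), empty RDATA.
        opt = b"\x00" + struct.pack("!HHIH", TYPE_OPT, udp_size, DO_BIT, 0)
    return header + question + opt


print(len(build_query("US.", dnssec=False)))  # 20
print(len(build_query("US.")))                # 31
```

A server that answers the second query with an OPT record of its own supports EDNS0, and comparing the SOA serial in each server's answer shows whether the servers are in sync.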

First I issued this query in dig format:

dig @a.gtld.biz US. SOA +dnssec +norec

The time-out confirmed that my script was reporting correctly. Then I tried again, this time not asking for DNSSEC records:

dig @a.gtld.biz US. SOA +norec

This worked: the server is alive and the question has an answer. Then I tried getting the DNSSEC answer using TCP:

dig @a.gtld.biz US. SOA +norec +dnssec +tcp

This gave me a 1558-byte answer, too large to fit in a single packet on a 1500-byte Ethernet link, so over UDP it has to be fragmented. But my system can handle big answers, so what’s wrong?
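A rough back-of-the-envelope check shows why this answer cannot travel in one packet. The sketch below is illustrative Python, assuming IPv4 with a 20-byte header and no options, an 8-byte UDP header, a 1500-byte Ethernet MTU, and that the 1558 bytes reported by dig is the size of the DNS message itself. The function name is hypothetical.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
UDP_HEADER = 8    # bytes


def ipv4_fragment_payloads(dns_message_size, mtu=1500):
    """Return the IP payload size of each fragment needed for one UDP answer."""
    datagram = dns_message_size + UDP_HEADER
    # Every fragment except the last must carry a multiple of 8 bytes.
    max_payload = (mtu - IPV4_HEADER) // 8 * 8
    sizes = []
    remaining = datagram
    while remaining > max_payload:
        sizes.append(max_payload)
        remaining -= max_payload
    sizes.append(remaining)
    return sizes


print(ipv4_fragment_payloads(1558))  # [1480, 86]
print(ipv4_fragment_payloads(512))   # [520]
```

Under these assumptions the .US answer needs two fragments, while a classic 512-byte answer fits in one packet.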

At this point I had a number of questions:

- “What is NeuStar (the .US operator) doing?”

- “Are any other signed domains showing the same behavior?”

- “Are the fragments from these servers different from other fragments?”

First things first; “What is .US returning in their SOA answer?”

- In the answer section they give out the signed SOA record.

- In the authority section there is a signed NS set. So far, so good.

- In the additional section there are the glue A and AAAA records for the name servers, AND a signed DNSKEY set for the US. zone.

Conclusion: NeuStar is not doing anything wrong, but there is no need for the DNSKEY in the additional section. As this is turning up some fragmentation issues, I consider this a good thing.

At this point I moved on to the second question; “Is anyone else showing the same behavior?” All the signed TLDs that I queried returned answers of less than 1500 bytes, so no fragmentation was taking place. I modified my script to ask for larger answers in order to force fragmentation. Instead of asking for type “SOA”, I asked for type “ANY”. For signed domains this should always give answers that are larger than 1500 bytes, since the signed SOA, DNSKEY, NS and NSEC/NSEC3 records are guaranteed to be in the answer, possibly along with others. Now things got interesting.

Almost all of the signed TLDs had one or more servers that timed out. .ORG, for example, had 4 out of 6, .CH had 2, and .SE had one. So the issue is not isolated to anything that the .US operator is doing, but is an indicator of something bigger.

I copied my script over to another machine I have and ran it there, and lo and behold, I got answers – no time-outs. The first machine runs FreeBSD-7.2 with the PF firewall; the second is an old FreeBSD-4.12 machine with IPFW.

Now on to the next question; “Are the fragments different?” A quick tcpdump verified that answers were coming back. I fired up Wireshark to do a full packet capture. To make the capture smaller I activated the built-in DNS filter, and ran my script against the .ORG servers and the .US servers.

Looking at the DNS headers there was nothing different, and the UDP headers looked fine. On the other hand I noticed that the IP header flags field from the servers that timed out all had DF (Do Not Fragment) set on all packets, and on the first packet in the fragmentation sequence both the DF and MF (More Fragments) bits were set. MF tells IP that this is a fragmented upper layer packet and this is not the last packet. At this point I wondered if somehow this bit combination was causing problems in my IP stack or the firewall running on the host.
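The DF and MF bits live in the same 16-bit field of the IPv4 header as the fragment offset (bytes 6 and 7). As a minimal sketch of how a capture tool reads them, the following Python decodes that field from a raw header. The function names are hypothetical, and the sample headers are constructed by hand for illustration; they are not taken from the captures described above.

```python
import struct

DF = 0x4000           # Don't Fragment
MF = 0x2000           # More Fragments
OFFSET_MASK = 0x1FFF  # fragment offset, counted in 8-byte units


def decode_fragment_field(ip_header):
    """Decode the DF/MF flags and the fragment offset from a raw IPv4 header."""
    (field,) = struct.unpack_from("!H", ip_header, 6)
    return {
        "df": bool(field & DF),
        "mf": bool(field & MF),
        "offset": (field & OFFSET_MASK) * 8,
    }


def sample_header(field):
    """Build a zeroed 20-byte header with only the flags/offset field set."""
    return bytes(6) + struct.pack("!H", field) + bytes(12)


# First fragment as described above: DF and MF both set, offset 0.
print(decode_fragment_field(sample_header(DF | MF)))
# A following last fragment: DF still set, MF clear, offset 185 * 8 = 1480.
print(decode_fragment_field(sample_header(DF | 185)))
```

A packet that says "do not fragment" while being a fragment itself is contradictory, which is a plausible reason for a strict packet normalizer to drop it.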

I disabled the PF firewall and suddenly the packets flowed through. After doing some searching on the Internet I discovered that I can tell PF to not drop these packets.

My old PF configuration had

scrub in all

The new one is

scrub in all no-df

Removing the scrub command also allows the packets to flow, but I do not recommend that solution.

I started asking around to find out what was causing the DF flag to be set on UDP packets, and the answer was “Linux is attempting MTU discovery”. I also noticed that for the last 2 years BIND releases have added code to attempt to force the OS not to set DF on UDP packets. See the file lib/isc/unix/socket.c and look for IP_DONTFRAG.

Conclusion: the tools I mentioned in the last post do not test to see if these malformed fragments are getting through. I have updated my Perl script to ask for a large answer from an affected site, and to report on that. Please let me know if you have the same issue and what you did to fix it on your own systems and firewalls.

Educause factsheet highlights DNSSEC

Posted by Denise Graveline in Adoption, DNSSEC, Technical guides, Uncategorized on January 15, 2010

Educause has just published a two-page factsheet on 7 things you should know about DNSSEC, aimed at college and university information technology officials. Noting that “DNSSEC can be an important part of a broad-based cybersecurity strategy,” the fact sheet explains that security has special implications for institutions of higher education:

Colleges and universities are expected to be “good Internet citizens” and to lead by example in efforts to improve the public good. Because users tend to trust certain domains, including the .edu domain, more than others, expectations for the reliability of college and university websites are high. To the extent that institutions of higher education depend on their reputations, DNSSEC is an avenue to avoid some of the kinds of incidents that can damage a university’s stature.

New key management guidance from NIST

Posted by Denise Graveline in Technical guides on January 6, 2010

At the close of 2009, the U.S. National Institute of Standards and Technology issued an “Application-Specific Key Management Guide” as part 3 of its Special Publication 800-57, “Recommendation for Key Management.” Section 8 of the publication focuses on DNSSEC deployment issues for U.S. federal agencies, including authentication of DNS data and transactions, special considerations for NSEC3 and key sizes, and more.
